Article: Deep learning in five and a half minutes

For decades, algorithm engineers have been trying to make computers “see” as well as we do. That’s no small feat: though today’s smartphone cameras provide about the same high-resolution image sensing ability as the human eye (seven megapixels or so), the computer that processes that data is nowhere near a match for the human brain. Consider that roughly half the neurons in the human cortex are devoted to visual processing, and it’s no surprise that this is a pretty hard task for a computer too. Year after year, engineers developed increasingly sophisticated algorithms to help computer vision inch its way forward.

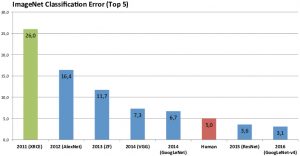

In 2012, Alex Krizhevsky and his team fed labeled ImageNet pictures into powerful GPUs to train a new deep learning neural network, now known as AlexNet. Instead of computer scientists trying to figure out by hand which features to look for in an image, AlexNet figured out what to look for itself. AlexNet entered the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) and beat the whole field by a whopping margin, with an error rate about 41% lower than that of the runner-up. Since 2012, every algorithm that’s won the ILSVRC has been based on the same principles.

Show report: Embedded Vision Summit

This year’s summit had more speakers, with 90 presentations across six tracks, one more track than last year. I recommend going through the slides, although there are quite a few. All the presentations are available for download on the Alliance’s website. Registered users can log in and download the proceedings (242MB) or watch presentation videos. Not registered yet? Go ahead here, it’s free. Our talk from last year on computer-vision-based 360-degree video systems is still available too.

BDTI’s InsideDSP: videantis adds deep learning core, toolset

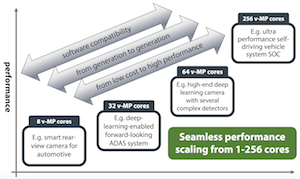

Germany-based processor IP provider videantis, launched in 2004, was one of the first of what has since become a plethora of vision processor suppliers. The company’s latest fifth-generation v-MP6000UDX product line, anchored by an enhanced v-MP (media processor) core, is tailored for the deep learning algorithms that are rapidly becoming the dominant approach to implementing visual perception. Yet it’s still capable of handling the traditional computer vision processing functions that were the hallmark of its v-MP4000HDX precursor.

Pssst – we’re still hiring!

We’ve added several people to our team in the past couple of months but are still looking for additional stellar hardware and software engineers to join our staff at our headquarters in Hannover, Germany. Do you think you can keep up with the pace of the rest of our team? Interested in taking on a challenge? Do you have experience in deep learning, embedded processing, low-power parallel architectures, or performance optimization? We’d love to hear from you.

See our open positions and shoot us a message.

Industry news

Deep learning figured out Rubik’s Cube all by itself

Yet another bastion of human skill and intelligence has fallen to the onslaught of the machines. A new kind of deep-learning machine has taught itself to solve a Rubik’s Cube without any human assistance. The milestone is significant because the new approach tackles an important problem in computer science—how to solve complex problems when help is minimal. “Our algorithm is able to solve 100% of randomly scrambled cubes while achieving a median solve length of 30 moves—less than or equal to solvers that employ human domain knowledge,” say McAleer and co. Read the article

With 3D sensing, the smartphone industry is more innovative than ever

Yole Développement has published its latest report on 3D sensing. With the introduction of the iPhone X in September 2017, Apple set the standard for both the technology and the use case for 3D sensing in consumer devices. Yole expects the global 3D imaging & sensing market to expand from $2.1B in 2017 to $18.5B in 2023, at a 44% CAGR. Alongside consumer, the automotive, industrial, and other high-end markets are also expected to see double-digit growth. Read the article
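For readers who want to sanity-check the growth figure, here is a minimal sketch of the compound annual growth rate (CAGR) arithmetic. It uses only the two market sizes quoted above and assumes six years of compounding from 2017 to 2023. The `cagr` helper is our own illustration, not something taken from Yole’s report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate as a fraction (0.44 means 44%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes quoted in the text, in billions of US dollars.
start_2017 = 2.1
end_2023 = 18.5

# Assumption: growth compounds over the six years between 2017 and 2023.
rate = cagr(start_2017, end_2023, 2023 - 2017)
print(f"Implied CAGR: {rate:.1%}")
```

Under these assumptions the implied rate comes out at about 43.7%, which is consistent with the rounded 44% in the report summary.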

Upcoming events

| Event | Date and location | Description |
| --- | --- | --- |
| IBC | 13–18 Sept, the Netherlands | IBC is the world’s most influential media, entertainment and technology show with more than 50,000 attendees. |
| AutoSens | 17–20 Sept, Brussels, Belgium | The world’s leading technical summit for ADAS and autonomous vehicle perception technology. We’ll present our talk “How deep learning affects automotive SOC and system designs”. |

Schedule a meeting with us by sending an email to sales@videantis.com. We’re always interested in discussing your video, vision, and deep learning SOC design ideas and challenges. We look forward to talking with you!

Was this newsletter forwarded to you and you’d like to subscribe? Click here.